MG400开发日志

1 MG400

MG400是越疆科技公司开发的一款4自由度机械臂.

2 开发接口

官方提供了基于TCP/IP协议的API:

TCP端口说明

29999端口(简称Dashboard端口)接收到客户端约定消息格式后会将结果反馈客户端30003端口用于机器人控制,单位 mm30004端口(简称实时反馈端口)每8ms反馈机器人的信息30005服务器端口每200ms反馈机器人的信息30006端口为可配置的反馈机器人信息端口(默认为每50ms反馈)

3 用户坐标系和工具坐标系

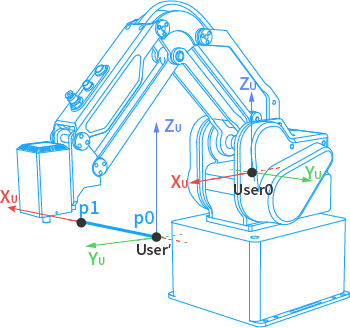

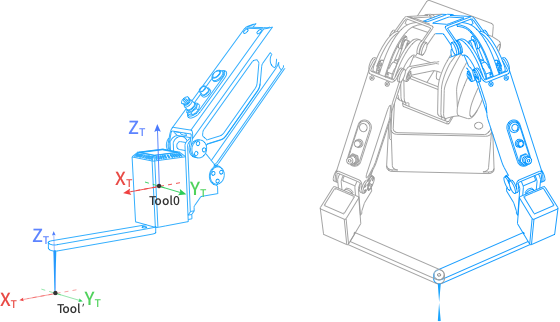

默认的用户坐标系和工具坐标系

简单解释用户坐标系和工具坐标系就是:

- 用户坐标系表示参考系 Source

- 工具坐标系表示目标系 Target

注:MG400设置工具坐标系有Bug,固件版本 1.5.6!

那么用户坐标系与工具坐标系就能定义 SE(3) 空间的变换关系,常用变换矩阵 T_4x4 表示,最终转换为机械臂的控制输入量,比如目标位姿。MG400 支持用户自定义用户坐标系和工具坐标系,从而简化用户程序。

4 常用控制指令

MovJ/MovL: 移动至欧式空间的目标点JointMovJ: 移动至关节空间的目标点

5 部署方法

4.1 标定相机内参

采集完整棋盘格图像

import cv2

vid = cv2.VideoCapture(2)

vid.set(cv2.CAP_PROP_FRAME_WIDTH, 1920)

vid.set(cv2.CAP_PROP_FRAME_HEIGHT, 1080)

count = 0

while True and count <= 10:

ret, frame = vid.read()

cv2.imshow("frame", frame)

if cv2.waitKey(100) & 0xFF == ord("q"):

cv2.imwrite("img_" + str(count)+".jpg", frame)

count = count+1

continue

vid.release()

cv2.destroyAllWindows()

调用 OpenCV 标定

import numpy as np

import cv2 as cv

import glob

# termination criteria

criteria = (cv.TERM_CRITERIA_EPS + cv.TERM_CRITERIA_MAX_ITER, 30, 0.001)

# prepare object points, like (0,0,0), (1,0,0), (2,0,0) ....,(6,5,0)

objp = np.zeros((11 * 8, 3), np.float32)

objp[:, :2] = np.mgrid[0:11, 0:8].T.reshape(-1, 2)

# Arrays to store object points and image points from all the images.

objpoints = [] # 3d point in real world space

imgpoints = [] # 2d points in image plane.

images = glob.glob("*.jpg")

for fname in images:

img = cv.imread(fname)

gray = cv.cvtColor(img, cv.COLOR_BGR2GRAY)

# Find the chess board corners

ret, corners = cv.findChessboardCorners(gray, (11, 8), None)

# If found, add object points, image points (after refining them)

if ret == True:

objpoints.append(objp)

corners2 = cv.cornerSubPix(gray, corners, (11, 11), (-1, -1), criteria)

imgpoints.append(corners)

# Draw and display the corners

cv.drawChessboardCorners(img, (11, 8), corners2, ret)

cv.imshow("img", img)

cv.waitKey(5)

cv.destroyAllWindows()

ret, mtx, dist, rvecs, tvecs = cv.calibrateCamera(

objpoints, imgpoints, gray.shape[::-1], None, None

)

print("\nRMS:", ret)

print("camera matrix:\n", mtx)

print("distortion coefficients: ", dist.ravel())

相机内参标定结果

RMS: 0.11316095150001748

camera matrix:

[[872.23857606 0. 955.39501163]

[ 0. 872.02928117 469.38217614]

[ 0. 0. 1. ]]

distortion coefficients: [-6.09149294e-03 -1.06957983e-02 1.72208316e-05 5.13094084e-05 -4.37856802e-04]

4.2 用因子图实现SE(2)手眼标定

常用的手眼标定算法本质上是在SE(3)空间里求解 AX=XB 方程。因为 MG400 只有4个自由度,简单起见只在SE(2)空间标定。

为了避免求解非线性方程,采用基于因子图的优化方法实现手眼标定。采用 SymForce(快速符号计算与优化框架) 实现:

from symforce import sympy as sm

from symforce import geo

from symforce import typing as T

def aruco_residual(

T_base_tag: geo.Pose2, T_tool_cam : geo.Pose2, measure_base_tool : geo.Pose2, measure_cam_tag : geo.Pose2, epsilon : T.Scalar

) -> geo.V3:

T_base_tag_predicted = measure_base_tool * T_tool_cam * measure_cam_tag

tangent_vec = (T_base_tag_predicted * T_base_tag.inverse()).to_tangent(epsilon=epsilon)

return geo.V3(tangent_vec)

from symforce.opt.factor import Factor

num_measure = 5

factors = []

for i in range(num_measure):

factors.append(Factor(

residual=aruco_residual,

keys=[f"T_base_tag", f"T_tool_cam", f"measure_base_tool[{i}]", f"measure_cam_tag[{i}]", "epsilon"]

))

from symforce.opt.optimizer import Optimizer

optimizer = Optimizer(

factors=factors,

optimized_keys=[f"T_base_tag", f"T_tool_cam"],

debug_stats=True

)

import numpy as np

from symforce.values import Values

initial_values = Values(

T_base_tag=geo.Pose2(R = geo.Rot2.identity(), t = geo.Vector2(0.39, 0)),

T_tool_cam=geo.Pose2(R = geo.Rot2.from_angle(-2.73144), t = geo.Vector2(-0.0287074, -0.0342912)),

measure_base_tool=[

geo.Pose2(R = geo.Rot2.from_angle(2.88934), t = geo.Vector2(0.35113, 0.0385629)),

geo.Pose2(R = geo.Rot2.from_angle(2.88944), t = geo.Vector2(0.351131,0.0385618)),

geo.Pose2(R = geo.Rot2.from_angle(2.72775), t = geo.Vector2(0.353217, -0.0041673)),

geo.Pose2(R = geo.Rot2.from_angle(2.49733), t = geo.Vector2(0.34512 ,-0.0753108)),

geo.Pose2(R = geo.Rot2.from_angle(1.98388), t = geo.Vector2(0.351387, -0.0361516))

],

measure_cam_tag=[

geo.Pose2(R = geo.Rot2.from_angle(-0.147354), t = geo.Vector2(-0.00381149, -0.0745884)),

geo.Pose2(R = geo.Rot2.from_angle(-0.148410), t = geo.Vector2(-0.0038226, -0.0746155)),

geo.Pose2(R = geo.Rot2.from_angle(0.0124934), t = geo.Vector2(0.00169984, -0.0248769)),

geo.Pose2(R = geo.Rot2.from_angle(0.2473300), t = geo.Vector2(-0.00783419, 0.0562505)),

geo.Pose2(R = geo.Rot2.from_angle(0.7639740), t = geo.Vector2(-0.0282627, 0.0315999))

],

epsilon=sm.default_epsilon

)

result = optimizer.optimize(initial_values)

print("initial_values", result.initial_values)

print("optimized_values", result.optimized_values)

标定结果

initial_values:

T_base_tag: <Pose2 R=<Rot2 <C real=1, imag=0>>, t=(0.39, 0)>

T_tool_cam: <Pose2 R=<Rot2 <C real=-0.917059962986112, imag=-0.398749325125336>>, t=(-0.0287074, -0.0342912)>

optimized_values:

T_base_tag: <Pose2 [0.9999997948860889, -0.0006404902656193984, 0.3957076122309438, -0.009644956111152432]>

T_tool_cam: <Pose2 [-0.9219164600501261, -0.38738874620804986, -0.030012402591907537, -0.03395704905415254]>

4.3 控制流程

TODO

- Sync

- Collision Detection using Spring

- BehaviorTree.CPP

References